Airwaves to Data Lake in 60 Seconds!

by Paul Symons

Summary

In my last blog, Capture And Upload Data With Vector, we covered some examples of using Vector to read, transform and send data to AWS S3.

This blog takes that idea further, using Vector, Amazon Data Firehose and Amazon S3 Tables to write data directly to Iceberg tables, ready for querying in your data lakehouse.

All code created for this blog is available in the flight-tracking-readsb repository.

Architecture

From top to bottom, this architecture describes:

- A USB software-defined radio used with the readsb console application to capture ADS-B aircraft transmissions as JSON documents

- Vector used as a data pipeline to transform and ship data from the laptop to Amazon Data Firehose

- Records that cannot be delivered are written by Firehose to a bucket used specifically for failed records

- Successful records are inserted into an Iceberg table hosted on S3Tables

- The S3Tables Iceberg table is federated and accessible to AWS Analytics services such as Athena via LakeFormation

If you prefer not to use AWS Analytics services to query your data, you can instead use the S3Tables Iceberg REST Catalog.
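As a rough illustration of that alternative, the sketch below builds the connection properties an Iceberg REST client would need to reach a table bucket. The endpoint URL pattern, the `s3tables` signing name and the property keys are assumptions modelled on the conventions PyIceberg uses for SigV4-signed REST catalogs; the function name, bucket name and account ID are hypothetical. Check all of them against the current S3Tables documentation before relying on this.

```python
def s3tables_rest_catalog_properties(region: str, account_id: str, table_bucket: str) -> dict:
    """Build REST catalog properties for an S3Tables table bucket (assumed layout)."""
    return {
        "type": "rest",
        # Assumed regional Iceberg REST endpoint for S3Tables
        "uri": f"https://s3tables.{region}.amazonaws.com/iceberg",
        # The warehouse is the ARN of the table bucket itself
        "warehouse": f"arn:aws:s3tables:{region}:{account_id}:bucket/{table_bucket}",
        # Requests are signed with SigV4 under the s3tables service name
        "rest.sigv4-enabled": "true",
        "rest.signing-name": "s3tables",
        "rest.signing-region": region,
    }


# Hypothetical account ID and bucket name, for illustration only
props = s3tables_rest_catalog_properties("ap-southeast-2", "123456789012", "flight-tracking")
print(props["uri"])
print(props["warehouse"])
```

A dictionary like this is the kind of input a client such as PyIceberg takes when loading a catalog; keeping it in a plain function makes it easy to check without touching AWS.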

Prerequisites

The S3Tables destination for Amazon Data Firehose currently has a hard dependency on LakeFormation, even though your delivery stream is configured with a service role that could use IAM permissions to permit fine-grained table operations. If you don’t want to use LakeFormation, then unfortunately this is not the solution for you.

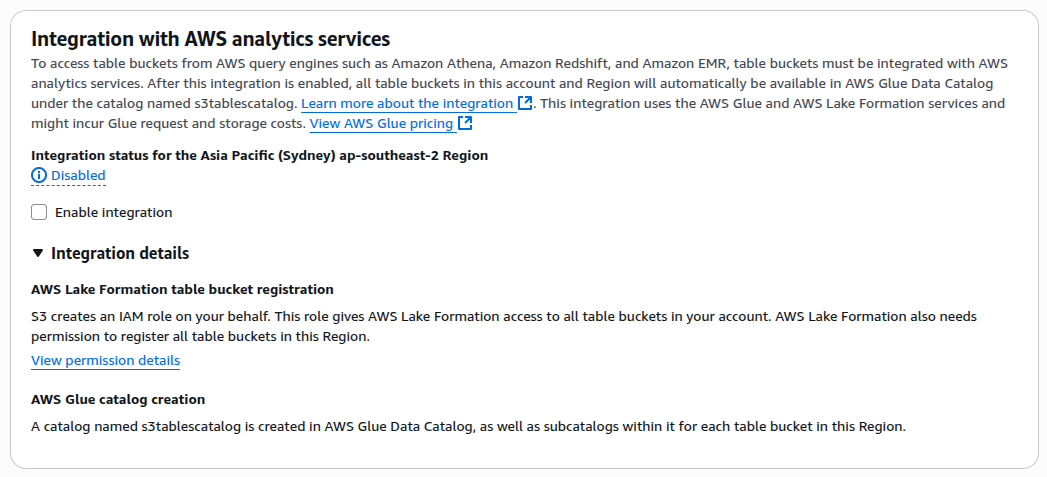

To make LakeFormation aware of S3Tables as a catalog, you must tickops the Enable Integration setting. You can’t miss this prompt; it nags you all the way through the S3 Table Buckets console until you acquiesce to it.

Once you have done this, each Table Bucket you create will be available as an alternative catalog in services such as LakeFormation, Athena, etc. The default catalog remains the original Glue Catalog, which is named after your AWS Account ID.

AWS Infrastructure

We will stand up the AWS Infrastructure using Terraform.

S3Tables: Bucket, Namespace and Table

Creating these resources is straightforward:

    resource "aws_s3tables_table_bucket" "flight_tracking" {
      name = var.flight_tracking_bucket_name

      encryption_configuration = {
        sse_algorithm = "AES256"
        # shouldn't need to specify the following as null,
        # but terraform complained otherwise
        kms_key_arn = null
      }
    }

    resource "aws_s3tables_namespace" "adsb" {
      table_bucket_arn = aws_s3tables_table_bucket.flight_tracking.arn
      namespace        = var.flight_tracking_namespace
    }

    resource "aws_s3tables_table" "aircraft" {
      table_bucket_arn = aws_s3tables_table_bucket.flight_tracking.arn
      namespace        = aws_s3tables_namespace.adsb.namespace
      name             = var.flight_tracking_aircraft_table
      format           = "ICEBERG"

      metadata {
        iceberg {
          schema {
            field {
              name     = "_adsb_message_count"
              type     = "int"
              required = false
            }
            ...
          }
        }
      }
    }

I like that you can configure the schema for your table in Terraform; unfortunately, it does not support defining partitions at this time.

Previously, the aws_glue_catalog_table Terraform resource and the AWS Glue create_table API also suffered from this shortcoming, but both now appear to support partition definition. Hopefully the S3Tables Create Table API will catch up soon.

Delivery Errors Bucket

The Terraform code includes the definition of a regular S3 bucket to receive delivery errors. When configuring the Data Firehose, you can choose whether to send only failed records, or simply all records to the delivery errors bucket - you might want to do this to satisfy audit requirements, for example.

Configuration of the delivery errors bucket is mandatory - without it you cannot create the Firehose delivery stream. It turns out to be pretty useful for catching two distinct kinds of problems:

- Your configuration is incorrect (e.g. LakeFormation permissions, Firehose role inadequacies)

- Your data differs from the Iceberg table definition (e.g. additional data, or conflicting or unsupported types)

Improving the accessibility of delivery errors will make your life much easier, so in the repository we define an external table (not Iceberg) that points to where the delivery errors are stored; this allows you to simply query the errors with Athena to debug the cause of your misfortune.
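If you would rather inspect an error object directly, for example after downloading it from the delivery errors bucket, a few lines of Python will do. This is a minimal sketch that assumes each line of the object is a JSON document with `errorCode`, `errorMessage` and a base64-encoded `rawData` field; those field names and the sample record are assumptions, so adjust them to match what you actually find in your bucket.

```python
import base64
import json


def decode_error_record(line: str) -> dict:
    """Parse one delivery error line and decode its base64 payload."""
    record = json.loads(line)
    # The original event is assumed to be stored base64 encoded under "rawData"
    payload = base64.b64decode(record["rawData"]).decode("utf-8")
    return {
        "error_code": record.get("errorCode"),
        "error_message": record.get("errorMessage"),
        "payload": json.loads(payload),
    }


# Hypothetical error record, built here so the example runs without AWS access
sample_payload = {"hex": "7c6b2d", "flight": "QFA1"}
sample_line = json.dumps({
    "errorCode": "Example.Error",
    "errorMessage": "example message",
    "rawData": base64.b64encode(json.dumps(sample_payload).encode("utf-8")).decode("ascii"),
})

decoded = decode_error_record(sample_line)
print(decoded["error_code"], decoded["payload"])
```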

Note that each error record includes the original payload, base64 encoded, so to read it in Athena you’ll need a query like:

    SELECT
      FROM_UTF8(FROM_BASE64(rawdata)) AS payload
    FROM iceberg_stream_errors

Amazon Data Firehose

Once you have all the supporting measures in place - roles, buckets, log groups etc. - defining the delivery stream itself is rather straightforward.

    resource "aws_kinesis_firehose_delivery_stream" "iceberg_stream" {
      name        = local.firehose_name
      destination = "iceberg"

      iceberg_configuration {
        cloudwatch_logging_options {
          enabled         = true
          log_stream_name = local.log_stream_name
          log_group_name  = aws_cloudwatch_log_group.firehose_logs.name
        }

        role_arn = aws_iam_role.firehose_role.arn

        # this is the fully qualified catalog arn for your s3tables
        # table bucket, which you have to construct yourself, e.g.
        # "arn:aws:glue:${region}:${account}:catalog/s3tablescatalog/${var.flight_tracking_bucket_name}"
        catalog_arn = local.catalog_arn

        buffering_interval = 60

        destination_table_configuration {
          database_name = aws_s3tables_namespace.adsb.namespace
          table_name    = aws_s3tables_table.aircraft.name
        }

        s3_backup_mode = "FailedDataOnly"

        s3_configuration {
          bucket_arn          = aws_s3_bucket.bad_records_bucket.arn
          role_arn            = aws_iam_role.firehose_role.arn
          error_output_prefix = "${local.firehose_name}/"
        }
      }
    }

If you decide you do want to capture all of your data events to the regular S3 bucket, modify the s3_backup_mode parameter to the value AllData instead of FailedDataOnly.

The Amazon Data Firehose service does a lot of pre-validation prior to letting you create a delivery stream. So whilst it’s straightforward to configure a delivery stream, ensuring the delivery stream’s role policy is correct is critical. Consider the role definition in the attached repository a minimum viable policy.
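The catalog ARN noted in the delivery stream comments has to be assembled by hand, and a typo in it is easy to make. The helper below is a small sketch of that construction, following the pattern quoted in the Terraform comment; the function name and the example account ID and bucket name are hypothetical.

```python
def s3tables_catalog_arn(region: str, account_id: str, table_bucket: str) -> str:
    """Assemble the Glue catalog ARN for an S3Tables table bucket."""
    if not (account_id.isdigit() and len(account_id) == 12):
        raise ValueError("account_id must be a 12-digit AWS account ID")
    return f"arn:aws:glue:{region}:{account_id}:catalog/s3tablescatalog/{table_bucket}"


# Hypothetical values, for illustration only
catalog_arn = s3tables_catalog_arn("ap-southeast-2", "123456789012", "flight-tracking")
print(catalog_arn)
```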

Configuring Vector

Once your Firehose is set up and running, configuring Vector is very easy. Simply take the name of your Firehose stream and add it as a new sink - Vector will do the rest:

    sinks:
      my-firehose:
        type: aws_kinesis_firehose
        inputs:
          - unnested
        stream_name: iceberg-stream
        encoding:
          codec: json
        batch:
          # send buffered data after one minute of not sending anything
          timeout_secs: 60
        region: ap-southeast-2

Considerations

LakeFormation: Terraform Support for S3TablesCatalog in Permissions

No shade on Terraform here - they had support for S3Tables at launch and my anecdotal experience is that the project does a great job of keeping up with changes to the hundreds of AWS APIs.

The “AWS Analytics Services Integration” mentioned previously makes S3Tables Table Buckets available as a new form of Glue Catalog. This is an important change, because for the last 5 years or so, the only valid CatalogId for LakeFormation permissions would have been a 12-digit AWS Account ID.

The new integration changes that by allowing a CatalogId in the following form:

123456789012:s3tablescatalog/<table-bucket-name>

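At the time of writing, the Terraform LakeFormation permissions resource does not appear to accept a CatalogId in this form. An issue exists, and a pull request has already been prepared, so this limitation will likely disappear very soon.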
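Until then, one possible workaround is to grant the permission outside Terraform. The sketch below only builds the request for the LakeFormation GrantPermissions call, using the CatalogId form shown above; the role ARN, bucket, namespace and table names are hypothetical. It requests ALL (shown as Super in the console), since that is what Firehose currently needs.

```python
def firehose_table_grant(account_id: str, table_bucket: str, namespace: str,
                         table: str, role_arn: str) -> dict:
    """Build keyword arguments for a LakeFormation grant on an S3Tables table."""
    # CatalogId in the new "<account>:s3tablescatalog/<table-bucket-name>" form
    catalog_id = f"{account_id}:s3tablescatalog/{table_bucket}"
    return {
        "Principal": {"DataLakePrincipalIdentifier": role_arn},
        "Resource": {
            "Table": {
                "CatalogId": catalog_id,
                "DatabaseName": namespace,
                "Name": table,
            }
        },
        "Permissions": ["ALL"],
    }


# Hypothetical identifiers, for illustration only
grant = firehose_table_grant(
    account_id="123456789012",
    table_bucket="flight-tracking",
    namespace="adsb",
    table="aircraft",
    role_arn="arn:aws:iam::123456789012:role/firehose-role",
)
print(grant["Resource"]["Table"]["CatalogId"])

# With AWS credentials available, the grant could then be applied with:
#   import boto3
#   boto3.client("lakeformation").grant_permissions(**grant)
```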

LakeFormation is mandatory and requires special permissions

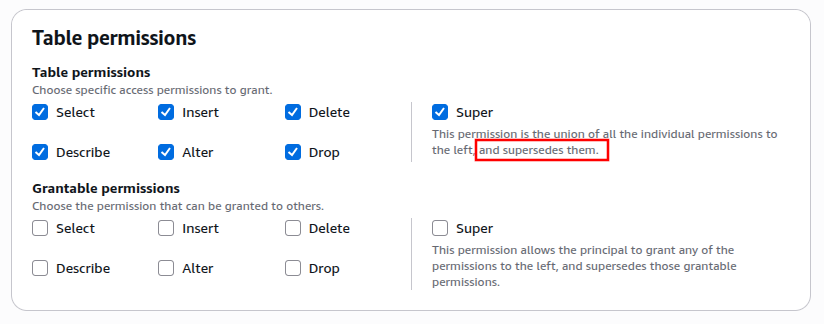

When granting permissions to the Firehose role to update your S3Tables table, you have no choice but to grant it Super (or ALL) permissions.

Ideally, I’d prefer to grant it SELECT, INSERT, and DESCRIBE. But even if you tick every single permission, Firehose will raise an error, saying that it does not have access to update your table. Your only remedy is to grant it the Super permission.

I personally don’t like the idea that the Firehose role would have the DROP permission on my table, and for this reason I would not use this feature in a production workload. Unlike Hive tables in the Glue Catalog, which are external tables, Iceberg tables are managed tables, meaning that if your table is deleted, the data is also deleted.

Compression

As far as I can see, it is not possible to send (e.g. gzip) compressed data to a Data Firehose delivery stream that has an S3Tables Iceberg destination.

When data is priced on volume of bytes ingested, this can make a considerable difference, given it is not uncommon to achieve a 5x reduction in size using even gzip compression.
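To get a feel for what compression would save, you can gzip a batch of records locally and compare sizes. The sketch below uses synthetic, made-up aircraft records (the field names and values are illustrative only), so the ratio it prints says nothing definitive about real ADS-B data; run it against a sample of your own events instead.

```python
import gzip
import json

# Synthetic newline-delimited JSON records; field names and values are made up
records = [
    {
        "hex": f"{0x7c0000 + i:06x}",
        "alt_baro": 30000 + (i % 50) * 25,
        "gs": 420.5,
        "track": 181.2,
        "messages": 1000 + i,
    }
    for i in range(1000)
]

raw = "\n".join(json.dumps(r) for r in records).encode("utf-8")
compressed = gzip.compress(raw)

# Ratio of uncompressed to compressed size
ratio = len(raw) / len(compressed)
print(f"{len(raw)} bytes raw, {len(compressed)} bytes gzipped, {ratio:.1f}x smaller")
```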

Cost

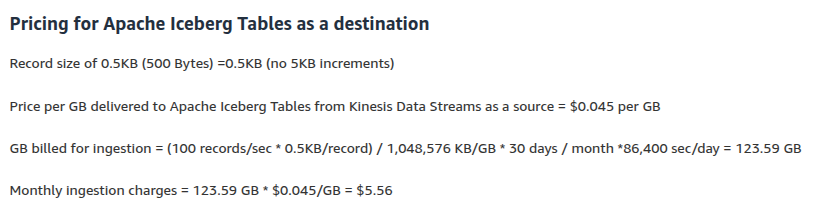

I was surprised to learn that Amazon Data Firehose pricing for Iceberg destinations is a little different, and simplified. Instead of rounding data up to 5KB increments, as is common with other Firehose destinations, Firehose charges a flat fee per GB ingested. This makes it easier to reason about the cost for ingestion, and I appreciate this.

Something to keep front of mind is that Firehose is just one part of your cost. You also have S3Tables costs for storage, table maintenance, etc. - OneTable’s article on S3Tables pricing did a good job of calling this out, and not long after it was published, AWS actually revised their pricing downwards.

The irony is that Iceberg was designed for petabyte-scale volumes of data. At the ingest rate used in the pricing example from AWS, it would take around 23 years to store 1 petabyte.

A more realistic example that would reach a petabyte in four months would aim to ingest at around 100MiB per second. To supply the Firehose with this volume of data would require many shards in a Kinesis Data Stream which would itself cost around $1,500 per month.

The corresponding monthly Firehose cost for data ingest - around 250TiB - would be over $23,000.

When working at this scale, you want to consider all of your costs, not just the service costs. And if you actually plan to send 100MiB per second to AWS, you might want to phone ahead first.
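The volume side of that example is easy to check with some back-of-envelope arithmetic. The sketch below assumes a constant 100MiB per second, a 30-day month, and a write limit of 1MiB per second per provisioned Kinesis shard; it deliberately leaves prices out, since those vary by region and change over time.

```python
MIB = 1024 ** 2
TIB = 1024 ** 4
PIB = 1024 ** 5
SECONDS_PER_DAY = 86_400

# Sustained ingest rate from the example above
rate_bytes_per_sec = 100 * MIB

# Volume ingested in a 30-day month, expressed in TiB
monthly_tib = rate_bytes_per_sec * SECONDS_PER_DAY * 30 / TIB

# Days of sustained ingest needed to accumulate one PiB
days_to_petabyte = PIB / (rate_bytes_per_sec * SECONDS_PER_DAY)

# Shards needed, assuming each provisioned shard accepts 1 MiB per second
shards_needed = rate_bytes_per_sec // (1 * MIB)

print(f"{monthly_tib:.0f} TiB per 30-day month")
print(f"{days_to_petabyte:.0f} days to reach 1 PiB")
print(f"{shards_needed} shards")
```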

Conclusion

Thanks for reading about my favourite AWS Service that I rarely use in production!

The following references were helpful to set up the Delivery Stream to Iceberg:

- https://docs.aws.amazon.com/firehose/latest/dev/controlling-access.html#using-s3-tables

- https://docs.aws.amazon.com/firehose/latest/dev/retry.html

- https://docs.aws.amazon.com/AmazonS3/latest/userguide/s3-tables-integrating-firehose.html#firehose-role-s3tables

- https://aws.amazon.com/blogs/storage/build-a-data-lake-for-streaming-data-with-amazon-s3-tables-and-amazon-data-firehose/

- https://docs.aws.amazon.com/AWSCloudFormation/latest/TemplateReference/aws-properties-lakeformation-permissions-databaseresource.html